EverMind AI Sets a New Standard for RAG with EverMemReRank

EverMind researchers

Published on October 24, 2025

About 1 minute to read

#SOTA

#2wiki

#Hotpotqa

#RAG

The ReRankModel, one of the EverMemModel modules, achieves state-of-the-art (SOTA) performance on the 2WikiMultihopQA (2wiki) and HotpotQA benchmarks.

We have integrated a generative ReRankModel into the traditional RAG retrieval framework. As of this week, it is showing steady performance gains on key benchmarks.
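To make the retrieve-then-rerank idea concrete, here is a minimal sketch of where a reranker sits in a RAG pipeline. It is not the EverMemReRank implementation: `retrieve`, `rerank`, and `toy_score` are hypothetical names, the first stage is a toy token-overlap retriever, and `toy_score` is only a placeholder for the call that would ask a generative model how relevant a passage is to the query.

```python
from typing import Callable, List


def tokenize(text: str) -> List[str]:
    """Lowercase whitespace tokenizer used by the toy components below."""
    return text.lower().split()


def retrieve(query: str, corpus: List[str], k: int) -> List[str]:
    """First stage: cheap candidate retrieval by token overlap.

    Stand-in for any sparse or dense retriever; ties keep corpus order.
    """
    q = set(tokenize(query))
    scored = [(len(q & set(tokenize(doc))), i) for i, doc in enumerate(corpus)]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [corpus[i] for _, i in scored[:k]]


def rerank(
    query: str,
    candidates: List[str],
    score_fn: Callable[[str, str], float],
    top_n: int,
) -> List[str]:
    """Second stage: reorder candidates by a relevance score, keep the best top_n."""
    return sorted(candidates, key=lambda d: score_fn(query, d), reverse=True)[:top_n]


def toy_score(query: str, passage: str) -> float:
    """Hypothetical placeholder for a generative reranker.

    A real system would prompt a model for a relevance judgment here;
    this version returns the fraction of query tokens found in the passage.
    """
    q = tokenize(query)
    p = set(tokenize(passage))
    return sum(t in p for t in q) / len(q) if q else 0.0
```

The passages returned by `rerank` would then be placed in the prompt of the QA model. Keeping the scorer behind a plain `score_fn` callable is a design choice of this sketch, so the placeholder can be swapped for a model call without touching the rest of the pipeline.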

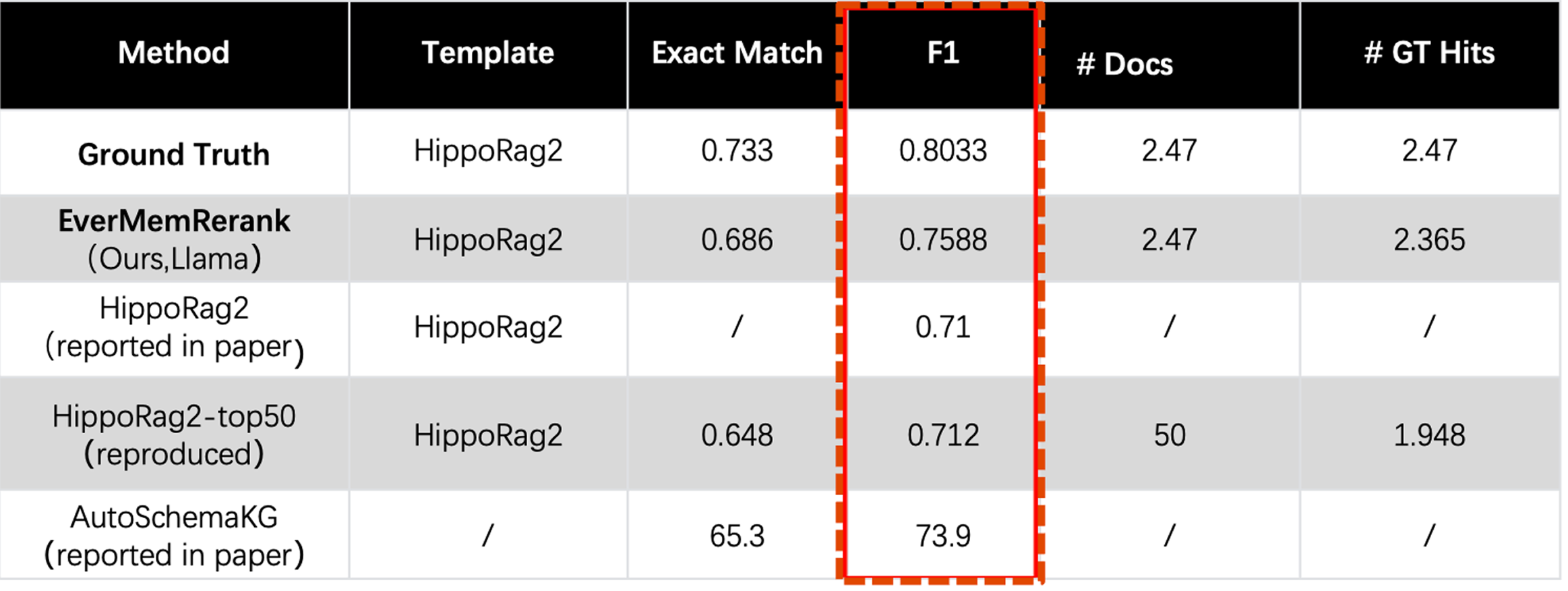

Performance on the 2wiki benchmark

Compared with HippoRag2 under identical conditions, with Llama3.3 as the LLM for QA, our method achieves a QA F1 score of 0.758 on the public 2WikiMultihopQA benchmark, outperforming HippoRag2 by 4.8 percentage points and reaching SOTA level.

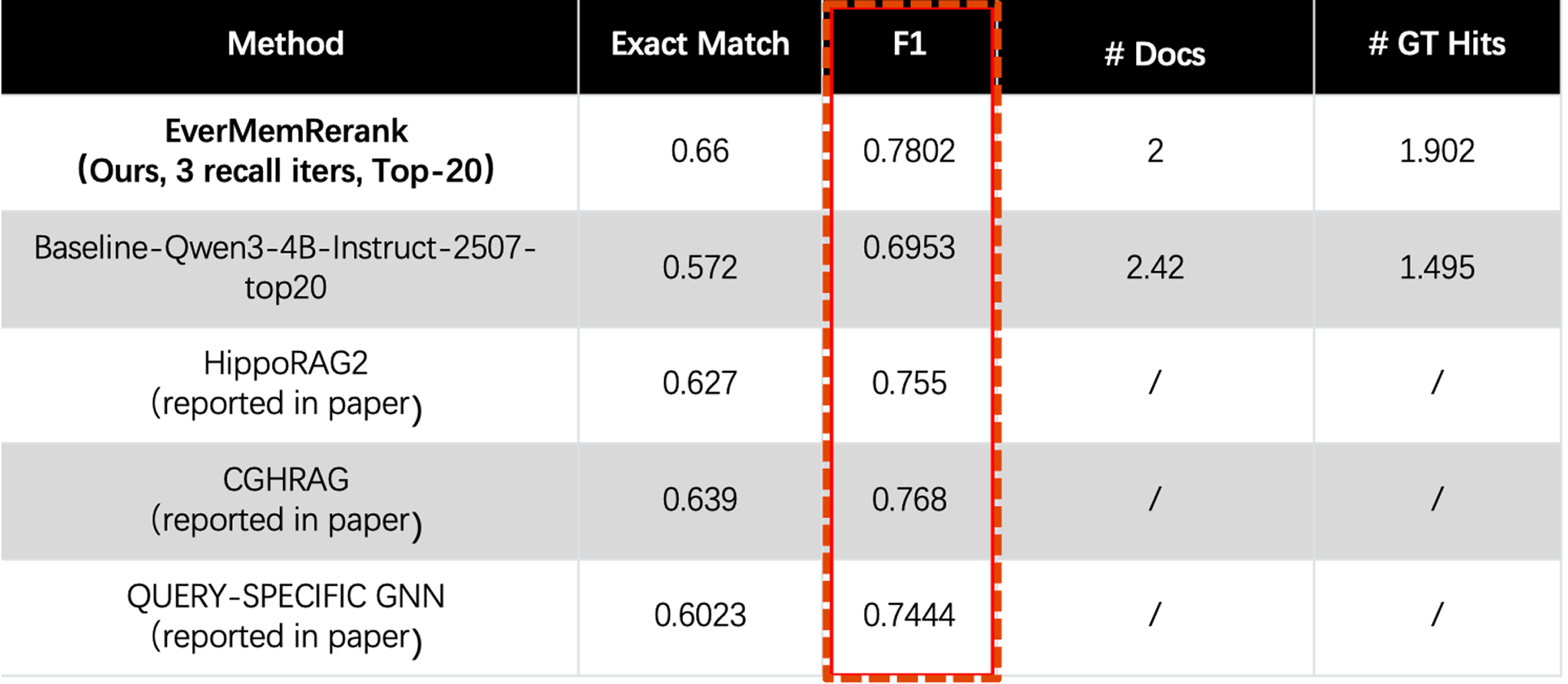

Performance on the HotpotQA benchmark

On the HotpotQA public leaderboard, our model achieves an F1 score of 0.7802, outperforming HippoRag2’s 0.755 by 2.5 percentage points and reaching SOTA level.
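
The results above are reported as QA F1. The post does not specify the exact scoring script, so the sketch below assumes the token-overlap F1 commonly used for extractive QA, with SQuAD-style normalization (lowercasing, stripping punctuation and English articles). The names `normalize` and `f1_score` are illustrative only.

```python
import re
import string
from collections import Counter
from typing import List


def normalize(text: str) -> List[str]:
    """Lowercase, drop punctuation and English articles, split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def f1_score(prediction: str, gold: str) -> float:
    """Token-level F1 between a predicted answer and a gold answer."""
    pred, ref = normalize(prediction), normalize(gold)
    if not pred or not ref:
        # Assumption of this sketch: two empty answers match, otherwise score 0.
        return float(pred == ref)
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)
```

A benchmark-level score under this definition is the mean of `f1_score` over all questions, which is why a partially correct answer such as "Paris, France" against the gold answer "Paris" still earns partial credit.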